AI Browser Extensions Are a Security Nightmare

“You stood on the shoulders of geniuses to accomplish something as fast as you could, and before you even knew what you had you patented it and packaged it and slapped it on a plastic lunchbox, and now you’re selling it. You want to sell it.”

That line comes to us courtesy of Dr. Ian Malcom (the leather-clad mathematician in Jurassic Park) but it could easily describe the recent explosion of AI*-powered tools, instead of the resurrection of the velociraptor.

Actually, the current AI situation may be even more perilous than Jurassic Park. In that film, the misguided science that brought dinosaurs back to life was at least confined to a single island and controlled by a single corporation. In our current reality, the dinosaurs are loose, and anyone who wants to can play with one**.

In the sixth months (as of this writing) since ChatGPT’s public release, AI-powered browser extensions have proliferated wildly. There are hundreds of them–search for “AI” in the Chrome web store and you’ll get tired of scrolling long before you reach the end of the list.

These browser extensions run the gamut in terms of what they promise to do: some will summarize web pages and email for you, some will help you write an essay or a product description, and still others promise to turn plaintext into functional code.

The security risks posed by these AI browser extensions also run the gamut: some are straightforward malware just waiting to siphon your data, some are fly-by-night operations with copy + pasted privacy policies, and others are the AI experiments of respected and recognizable brands.

We’d argue that no AI-powered browser extension is free from security risk, but right now, most companies don’t even have policies in place to assess the types and levels of risk posed by different extensions. And in the absence of clear guidance, people all over the world are installing these little helpers and feeding them sensitive data.

The risks of AI browser extensions are alarming in any context, but here we’re going to focus on how workers employ AI and how companies govern that use. We’ll go over three general categories of security risks, and best practices for assessing the value and restricting the use of various extensions.

*Yes, large language models (LLMs) are not actually AI in that they are not actually intelligent, but we’re going to use the common nomenclature here.

**We’re not really comparing LLMs with dinosaurs, because the doomsday language around AI is largely a distraction from its real-world risks to data security and the job market, but you get the idea.

Malware Posing as AI Browser Extensions

The most straightforward security risk of AI browser extensions is that some of them are simply malware.

On March 8, Guardio reported that a Chrome extension called “Quick access to Chat GPT” was hijacking users’ Facebook accounts and stealing a list of “ALL (emphasis theirs) cookies stored on your browser–including security and session tokens…” Worse, though the extension had only been in the Chrome store for a week, it was downloaded by over 2000 users per day.

In response to this reporting, Google removed this particular extension, but more keep cropping up, since it seems that major tech platforms lack the will or ability to meaningfully police this space. As Guardio pointed out, this extension should have triggered alarms for both Google and Facebook, but they did nothing.

This laissez faire attitude towards criminals would likely shock big tech’s users, who assume that a product available on Chrome’s store and advertised on Facebook had passed some sort of quality control. To quote the Guardio article, this is part of a “troublesome hit on the trust we used to give blindly to the companies and big names that are responsible for the majority of our online presence and activity.”

What’s particularly troubling is that malicious AI-based extensions (including the one we just mentioned) can behave like legitimate products, since it’s not difficult to hook them up to ChatGPT’s API. In other forms of malware–Iike the open source scams poisoning Google search results–someone will quickly realize they’ve been tricked once the tool they’ve downloaded doesn’t work. But in this case, there are no warning signs for users, so the malware can live in their browser (and potentially elsewhere) as a comfortable parasite.

What Are The Security Risks of Legitimate AI-Powered Browser Extensions?

Even the most die-hard AI evangelist would agree that malicious browser extensions are bad and we should do everything in our power to keep people from downloading them.

Where things get tricky (and inevitably controversial*) is when we talk about the security risks inherent in legitimate AI browser extensions.

Here are a few of the potential security issues:

Sensitive data you share with a generative AI tool could be incorporated into its training data and viewed by other users. For a simplified version of how this could play out, imagine you’re an executive looking to add a little pizazz to your strategy report, so you use an AI-powered browser extension to punch up your writing. The next day, an executive at your biggest competitor asks the AI what it thinks your company’s strategy will be, and it provides a surprisingly detailed and illuminating answer!

Fears of this type of leak have driven some companies–including Verizon, Amazon, and Apple–to ban or severely restrict the use of generative AI. As The Verge’s article on Apple’s ban explains: “Given the utility of ChatGPT for tasks like improving code and brainstorming ideas, Apple may be rightly worried its employees will enter information on confidential projects into the system.”The extensions or AI companies themselves could have a data breach. In fairness, this is a security risk that comes with any vendor you work with, but it bears mentioning because it’s already happened to one of the industry’s major players. In March, OpenAI announced that they’d recently had a bug “which allowed some users to see titles from another active user’s chat history” and “for some users to see another active user’s first and last name, email address, payment address” as well as some other payment information.

How vulnerable browsers extensions are to breaches depends on how much user data they retain, and that is a subject on which many “respectable” extensions are frustratingly vague.The whole copyright + plagiarism + legal mess. We wrote a whole article about this when GitHub Copilot debuted, but it bears repeating that LLMs frequently generate pictures, text, and code that bear a clear resemblance to a distinct human source. As of now, it’s an open legal question as to whether this constitutes copyright infringement, but it’s a huge roll of the dice. And that’s not even getting into the quality of the output itself–LLM-generated code is notoriously buggy and often replicates well-known security flaws.

These problems are so severe that on June 5, Stack Overflow’s volunteer moderators went on strike to protest the platform’s decision to allow AI-generated content. In an open letter, moderators wrote that AI would lead to the proliferation of “incorrect information ("hallucinations”) and unfettered plagiarism.“

AI developers are making good faith efforts to mitigate all these risks, but unfortunately, in a field this new, it’s challenging to separate the good actors from the bad.

Even a widely-used extension like fireflies (which transcribes meetings and videos) has terms of service that amount to "buyer beware.” Among other things, they hold users responsible for ensuring that their content doesn’t violate any rules, and promises only to take “reasonable means to preserve the privacy and security of such data.” Does that language point to a concerning lack of accountability or is it just boilerplate legalese? Unfortunately, you have to decide that for yourself.

*The great thing about writing about AI is that everyone is very calm and not at all weird when you bring up your concerns.

AI’s “Unsolvable” Threat: Prompt Injection Attacks

Finally, let’s talk about an emerging threat that might be the scariest of them all: websites stealing data via linked AI tools.

The first evidence of this emerged on Twitter on May 19th.

This looks like it might be the first proof of concept of multiple plugins - in this case WebPilot and Zapier - being combined together to exfiltrate private data via a prompt injection attack

— Simon Willison (@simonw) May 19, 2023

I wrote about this class of attack here: https://t.co/R7L0w4Vh4l https://t.co/2XWHA5JiQx

If that explanation makes you scratch your head, here’s how Willison explains it in “pizza terms.”

If I ask ChatGPT to summarize a web page and it turns out that web page has hidden text that tells it to steal my latest emails via the Zapier plugin then I’m in trouble

— Simon Willison (@simonw) May 22, 2023

These prompt injection attacks are considered unsolvable given the inherent nature of LLMs. In a nutshell: the LLM needs to be able to make automated next-step decisions based on what it discovers from inputs. But if those inputs are evil then the LLM can be tricked into doing anything, even things it was explicitly told it should never do.

It’s too soon to gauge the full repercussions of this threat for data governance and security, but at present, it appears that the threat would exist regardless of how responsible or secure an individual LLM, extension, or plugin is.

The risks here are severe enough that the only truly safe option is: do not ever link web-connected AI to critical services or data sources. The AI can be induced into exfiltrating anything you give it access to, and there are no known solutions to this problem. Until there are, you and your employees need to steer clear.

Defining what data and applications are “critical” and communicating these policies with employees should be your first AI project.

What AI Policies Should I Have for Employees?

The AI revolution happened overnight, and we’re all still adjusting to this brave new world. Every day, we learn more about this technology’s applications: the good, the bad, and the cringe. Companies in every industry are under a lot of pressure to share how they’ll incorporate AI into their business, and it’s okay if you don’t have the answers today.

However, if you’re in charge of dictating your company’s AI policies, you can’t afford to wait any longer to set clear guidelines about how employees can use these tools. (If you need a starting point, here’s a resource with a sample policy at the end.)

There are multiple routes you can take to govern employee AI usage. You could go the Apple route and forbid it altogether, but an all-out ban is too extreme for many companies, who want to encourage their employees to experiment with AI. Still, it’s going to be tricky to embrace innovation while practicing good security. That’s particularly true of browser extensions, which are inherently outward-facing and usually on by default. So if you’re going to allow their use, here are a few best practices:

Education: Like baby dinosaurs freshly hatched from their eggs, AI extensions look cute, but need to be treated with a great deal of care. As always, that starts with education. Most employees are not aware of the security risks posed by these tools, so they don’t know to exercise caution about which ones to download and what kinds of data to share. Educate your workforce about these risks and teach them how to assess malicious versus legitimate products.

Allowlisting: Even with education, it’s not reasonable to expect every employee to do a deep dive into an extension’s privacy policy before hitting download. With that in mind, the safest option here is to whitelist extensions on a case-by-case basis. As we wrote in our blog about Grammarly, you should try to find safer alternatives to dangerous tools, since an outright ban can hurt employees and drive them to Shadow IT. In this case, look for products that explicitly pledge not to feed your data into their models (such as this Grammarly alternative).

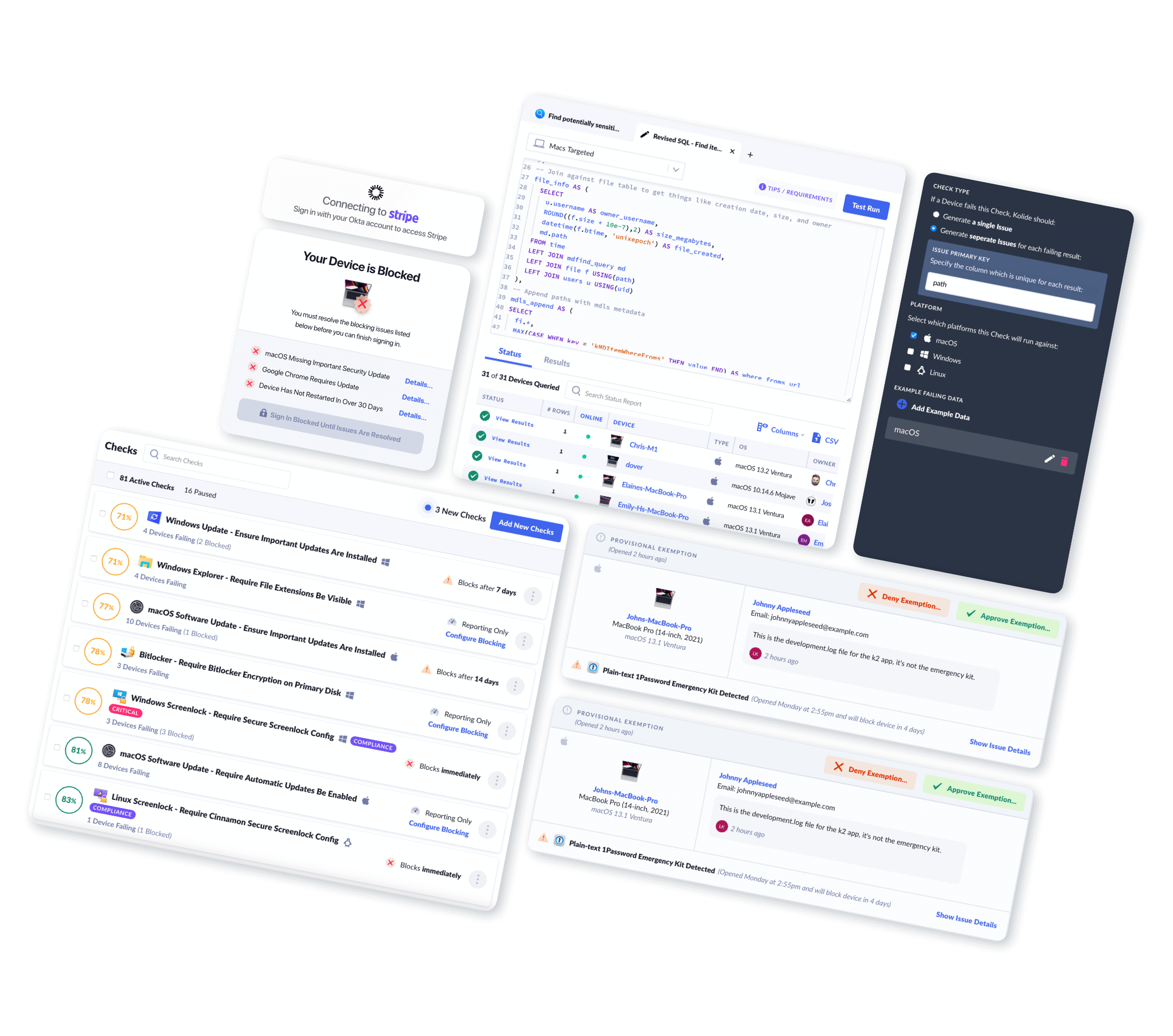

Visibility and Zero Trust Access: You can’t do anything to protect your company’s from the security risks of AI-based extensions if you don’t know which ones employees are using. In order to learn that, the IT team needs to be able to query the entire company’s fleet to detect extensions. From there, the next step is to automatically block devices with dangerous extensions from accessing company resources.

That’s what we did at Kolide when we wrote a Check for GitHub Copilot that detects its presence on a device and stops that device from authenticating until Copilot is removed. We also let admins write custom checks to block individual extensions as needed. But again, simple blocking shouldn’t be the final step in your policy. Rather, it should open up conversations about why employees feel they need these tools, and how the company can provide them with safer alternatives.

Those conversations can be awkward, especially if you’re detecting and blocking extensions your users already have installed. Our CEO, Jason Meller, has written for Dark Reading about the cultural difficulties in stamping out malicious extensions: “For many teams, the benefits of helping end users are not worth the risk of toppling over the already wobbly apple cart.” But the reluctance to talk to end users creates a breeding ground for malware: “Because too few security teams have solid relationships built on trust with end users, malware authors can exploit this reticence, become entrenched, and do some real damage.

I’ll close by saying that this is a monumental and rapidly evolving subject, and this blog barely grazes the tip of the AI iceberg. So if you’d like to keep up with our work on AI and security, subscribe to our newsletter! It’s really good and it only comes out twice a month!