89% of Workers Use AI–Far Fewer Understand the Risks

In late November 2022, OpenAI released ChatGPT to the public and launched the AI revolution. A few days later, Kolide’s CEO took to our company’s Slack and urged us to try out this new “society changing technology.”

Around the world, bosses were telling their employees the same thing: start playing with generative AI and figure out how it can make you (and us) faster, smarter, better. Less than a year later, that advice has taken hold to an astonishing degree.

Kolide’s survey of over 300 knowledge workers found that 89% use some form of AI to do their jobs at least once a month. (And when we say “AI,” we’re talking about generative AI tools built around LLMs and foundation models, not other forms of machine learning.)

This single data point tells a compelling story on its own. Just compare AI’s rate of adoption in a single year with email, which took decades to achieve this level of saturation.

But the story of employee AI use is even more interesting and complex when we put it in the context of AI’s risks. The survey found that the same businesses that encourage (or ignore) their employees’ AI use aren’t training them on how to use it safely.

Forrester’s 2024 AI Predictions Report anticipates that “shadow AI will spread as organizations struggle to keep up with employee demand, introducing rampant regulatory, privacy, and security issues.” Our own findings echo these concerns, but they also point to how companies can get ahead of these issues before AI-generated work has thoroughly and invisibly infiltrated their entire organization.

The Risks of AI in the Workplace: A Brief Overview

Before we dive deeper into the survey data, let’s briefly define what we mean by the risks of AI-based applications.

Firstly, we are not talking about the “existential” risks that AI founders and boosters are fond of warning us about. It is highly unlikely that anyone reading this article will unleash nuclear war or the next pandemic via a chatbot.

We’re also not talking about the danger that AI will replace human labor, or the possibility that workers who experiment with AI will experiment themselves out of a job.

In this case, we’re concerned with the types of risks that AI confers on a business, which can create legal, reputational, and financial blowback. Here are a few examples of such risks.

AI errors

LLMs are famously, and perhaps inherently, prone to making errors, in which they either fabricate information or recycle incorrect training data. (These types of errors are often described with the umbrella term “hallucinations,” although that term is somewhat problematic because it anthropomorphizes software and draws attention away from the companies responsible for creating it.)

We could write a whole blog post of cautionary tales of AI, but a few examples include the lawyer who submitted a brief that cited fictitious case law, the news outlet that printed error-riddled stories, and the eating disorder hotline chatbot that advised callers to lose weight. The dangers here are so significant that Forrester predicts insurance providers will soon offer “AI hallucination insurance” to companies, though we’ll see if that proves to be a more viable business than cybersecurity insurance.

To be sure, LLMs can offer real value to workers; our own Jason Meller has written eloquently about how they give him a starting point to untangle complex coding problems. But, because AI tools deliver falsehoods with the same confidence as fact, their work needs to be closely reviewed by someone with enough expertise to spot errors. (And this, plainly, is not possible if you don’t know whether or how your company’s employees are using AI.)

Finally, we should mention the potential for LLMs to draw conclusions that are not strictly “errors,” but which perpetuate societal biases. This is a major concern to legislators; Rep. Yvette Clarke of New York recently said that “bias in AI will be the civil rights issue of our time.” If your HR department is using AI-enabled tools to screen résumés, for example, you may be obligated to report that to applicants under new laws.

AI plagiarism

Despite the name, generative AI is not capable of generating any original output, only drawing from and remixing its training data (which isn’t to suggest that its products aren’t often useful or entertaining).

As yet, there’s no clear consensus about whether an AI’s scraping and rejiggering of existing content constitutes plagiarism and/or copyright violation. There are multiple lawsuits wending their way through the courts to decide this question, with litigants ranging from authors to comedians to developers. In the case of code, we’ve written extensively about developers who allege that GitHub Copilot has lifted their work in violation of open source licenses.

Obviously, no company wants a copyright lawsuit lurking in their codebase or marketing materials, but the risks here aren’t merely legal. Even if AI companies win these cases or discover a way of properly citing their sources, there are consequences to disseminating work that is fundamentally unoriginal. That might not matter for internal use cases, like AI punching up an email or helping design a spreadsheet. But if we’re talking about public-facing content, then you risk cheapening your brand with poor-quality content, and potentially being punished on the SEO front for failing to deliver value.

AI security risks

The final category of AI risk we’ll touch on is the potential for AI tools to enable data breaches and hacks.

For one thing, AI-generated code has been found to contain known vulnerabilities and other security flaws. For another, there has been an explosion of malware masquerading as AI, particularly in the Wild West of browser extensions.

Many companies are also concerned that AI tools will siphon up their trade secrets to use as training data, although this feature can be disabled for some tools. Lastly, bad actors can jailbreak LLMs and trick them into taking malicious actions, such as exfiltrating a user’s data without their knowledge or consent.

AI in the Workplace Is Welcomed, but Poorly Understood

In the Shadow IT Report, we were interested in employee AI use as an example of how companies respond to new technologies and design policies to govern their use. We polled workers at every level of the corporate ladder–from the ground floor to the C-Suite–and with varying levels of technical expertise, though all respondents use computers for the bulk of their work.

In particular, we were curious about:

- How many companies allowed AI use, and whether more employees used AI than were allowed to do so.

- How well companies educated their employees about AI’s risks or put guardrails around which tools they could use.

- Whether workers had an accurate understanding of how their colleagues were using AI.

Let’s take our findings in order.

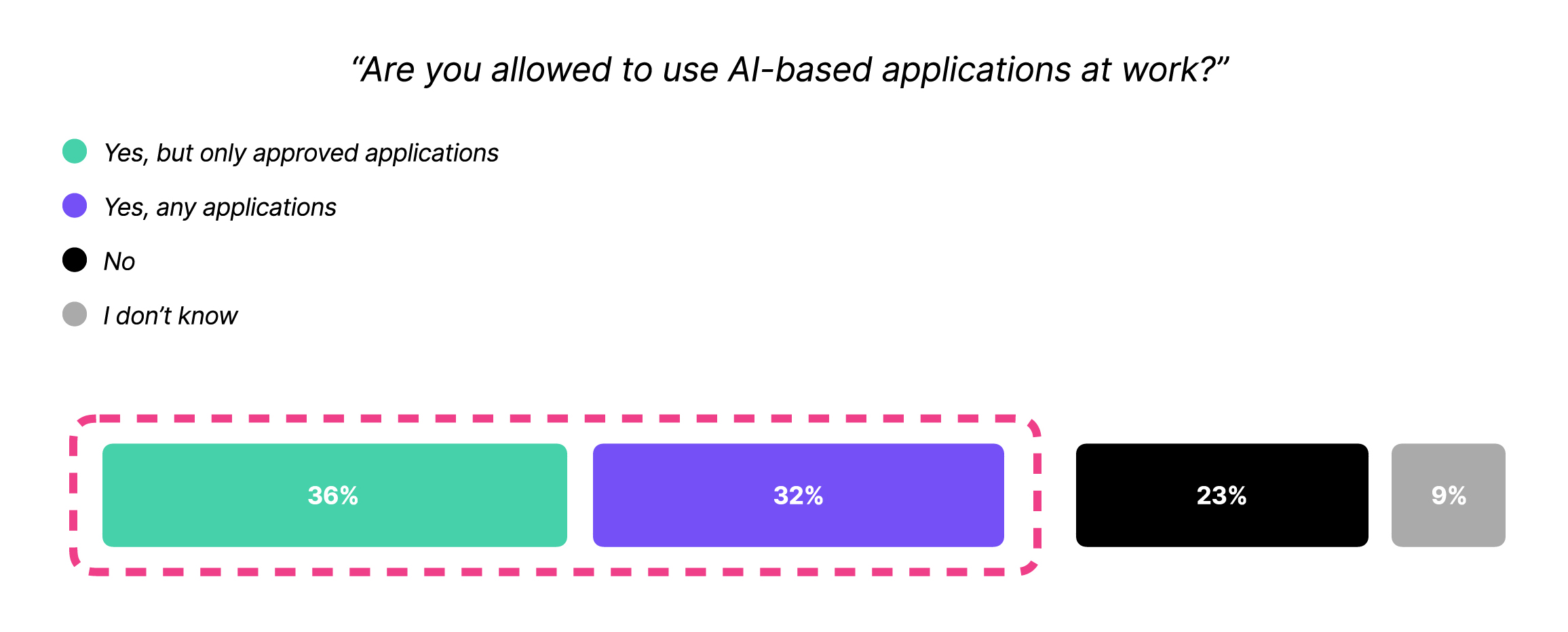

68% of companies allow AI use

There’s a significant discrepancy between the 68% of respondents who say they’re allowed to use AI for work and the 89% who say they do use it. That gap means that AI-generated text, images, and code are finding their way into our work output invisibly, without receiving extra scrutiny.
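To put a number on that gap, here is a minimal sketch of the arithmetic. It uses only the two survey figures above; the function names and the 1,000-person company are hypothetical, chosen for illustration. Even if every worker who is allowed to use AI actually does, the remaining users must be doing so without permission, so the difference is a lower bound rather than an exact count.

```python
def min_unsanctioned_share(use_pct: int, allowed_pct: int) -> int:
    """Lower bound, in percentage points, on workers using AI without permission.

    Assumes the best case: everyone who is allowed to use AI is among the users.
    """
    return max(0, use_pct - allowed_pct)


def min_unsanctioned_headcount(headcount: int, use_pct: int, allowed_pct: int) -> int:
    """Apply the same lower bound to a company of a given size."""
    return headcount * min_unsanctioned_share(use_pct, allowed_pct) // 100


# Survey figures from the article: 89% use AI, 68% are allowed to.
gap = min_unsanctioned_share(89, 68)

# Hypothetical 1,000-person company, for illustration only.
people = min_unsanctioned_headcount(1000, 89, 68)

print(f"At least {gap}% of workers use AI without permission")
print(f"In a 1,000-person company, that is at least {people} people")
```

The real figure is likely higher, since not everyone with permission will be an AI user.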

It’s also important to acknowledge the huge difference between the 36% of companies that only allow approved AI applications, and the 34% that have an unrestricted AI policy. Given the proliferation of AI tools with dubious data privacy and security policies, not to mention those that are simply malware disguised as AI, it’s worrisome that so many companies have no restrictions.

Meanwhile, nearly a quarter of companies ban employee use of AI outright, putting them in the ranks of Samsung, Spotify, Apple, and other corporations concerned about data privacy and security.

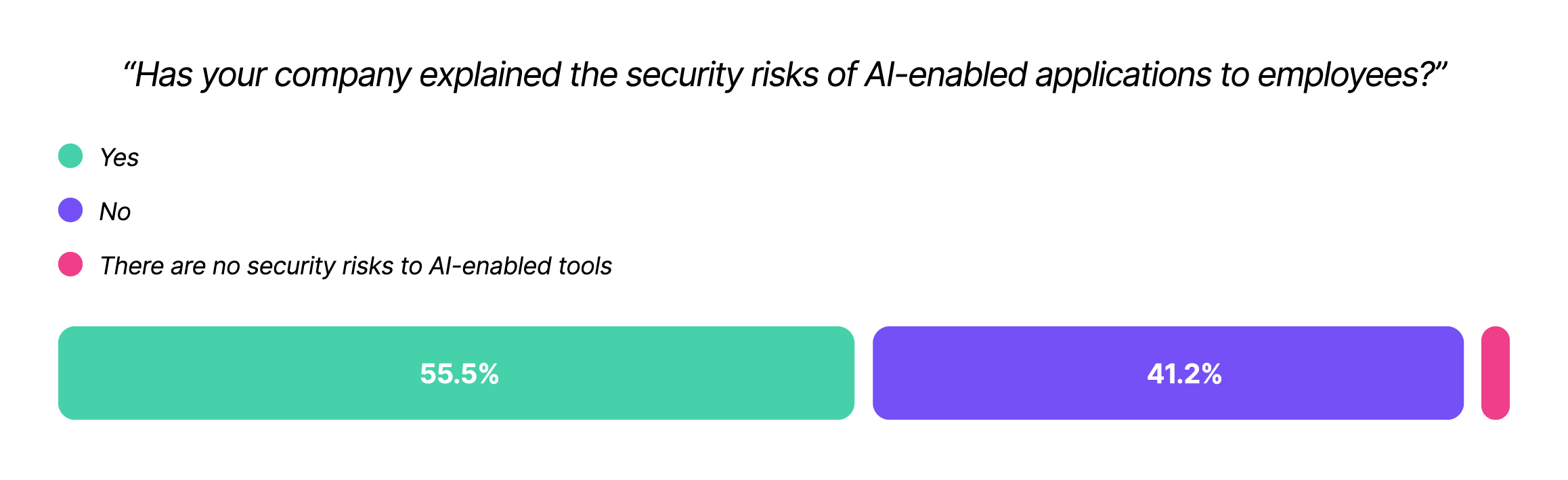

Only 56% of companies educate workers on the risks of AI

Again, we see a discrepancy between the 68% of respondents allowed to use AI, and the 56% who have been educated about how to use it responsibly.

We can attribute this lack of education to a few factors. For one thing, “the risks of AI” are hotly debated, even among experts in the field, so leaders at the average company may not have a clear sense of what they are. Also, many companies (regrettably) treat employee security training as little more than a check-box exercise, so they have neither the tools nor culture to respond to emerging threats. Another of the survey’s findings is that the largest share of companies (41%) only provide security training once a year, so some employees may not have been trained since before the current crop of LLMs even appeared.

In an effort to supplement our survey findings, we put out a call for quotes on AI in the workplace. We received over 100 responses, and disconcertingly, though unsurprisingly, many of them had clearly been written by AI. However, most of the human respondents said that they had concerns over the ethical and privacy implications of AI, but that their workplace did not have a formal policy around it. To quote one respondent, “there’s a general understanding that if AI helps get the job done better, why not?”

The last reason that companies fail to take employee AI use seriously is that they simply aren’t aware it’s happening.

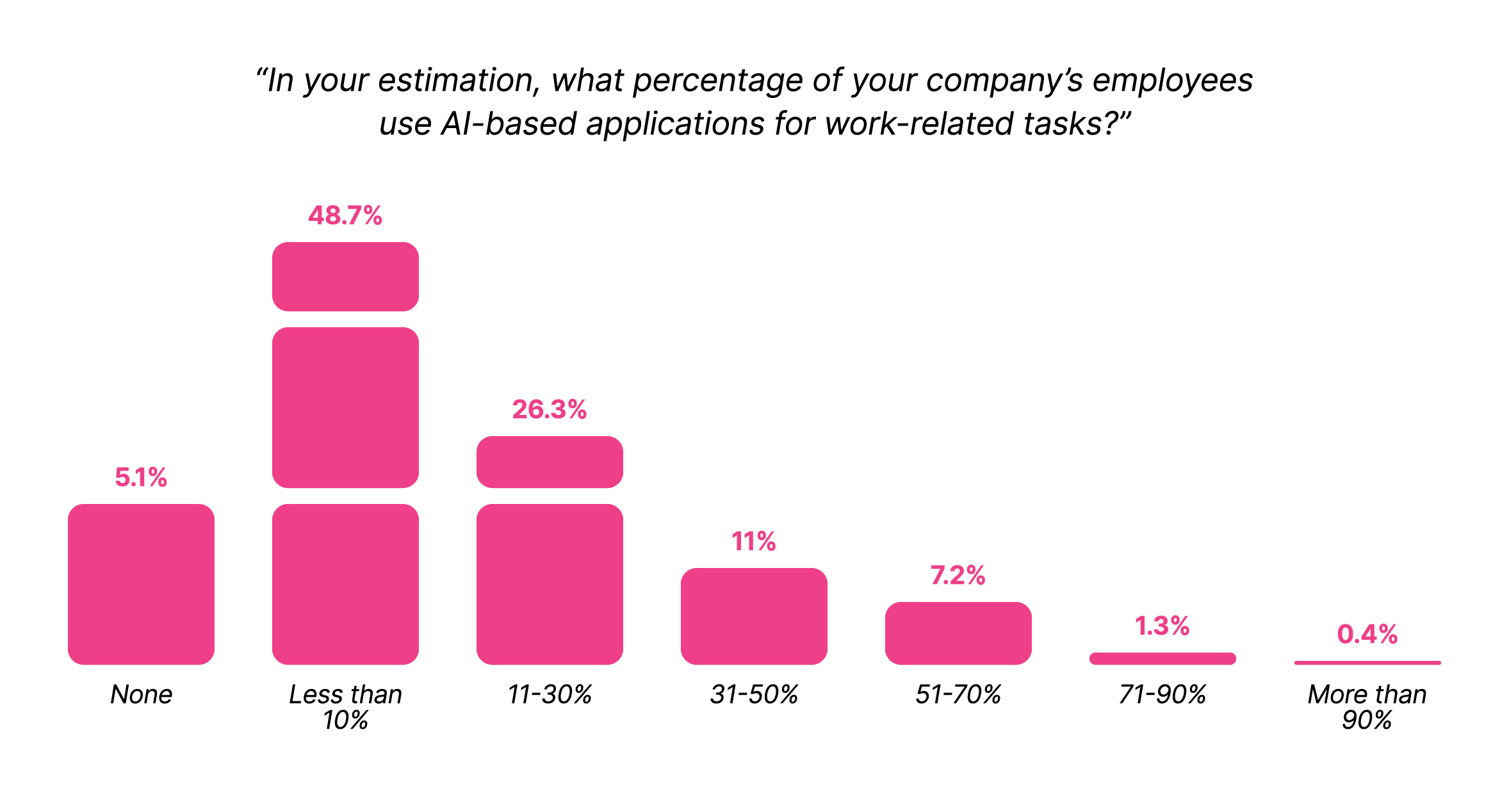

Workers badly underestimate how much their colleagues use AI

This was the most surprising result in our survey. Despite the fact that most workers use AI, and most companies permit it, the average worker assumes that they’re the only one using it.

Roughly half of respondents said that fewer than 10% of their colleagues use AI. Only 1% of respondents correctly guessed that the true number is closer to 90%.

How can we account for this wild disconnect between our perceptions of how workers use AI and the reality? It might just be the familiar mix of smugness and embarrassment we all feel when we think we’ve discovered a shortcut no one else is using–like sneaking snacks into the movies or twiddling one’s mouse instead of working*.

Regardless of why we’re getting this so wrong, the lack of awareness is creating a dangerous environment. Companies are failing to regulate worker AI usage because they don’t think they need to. That needs to change.

*Not that this writer has ever, or would ever, do such a thing.

You Need an AI Acceptable Use Policy Now

There are legitimately exciting and useful applications for generative AI tools, but the only way those benefits can outweigh the risks is by creating policies to mandate responsible AI use.

What should the goals of these policies be? For us, they were the following:

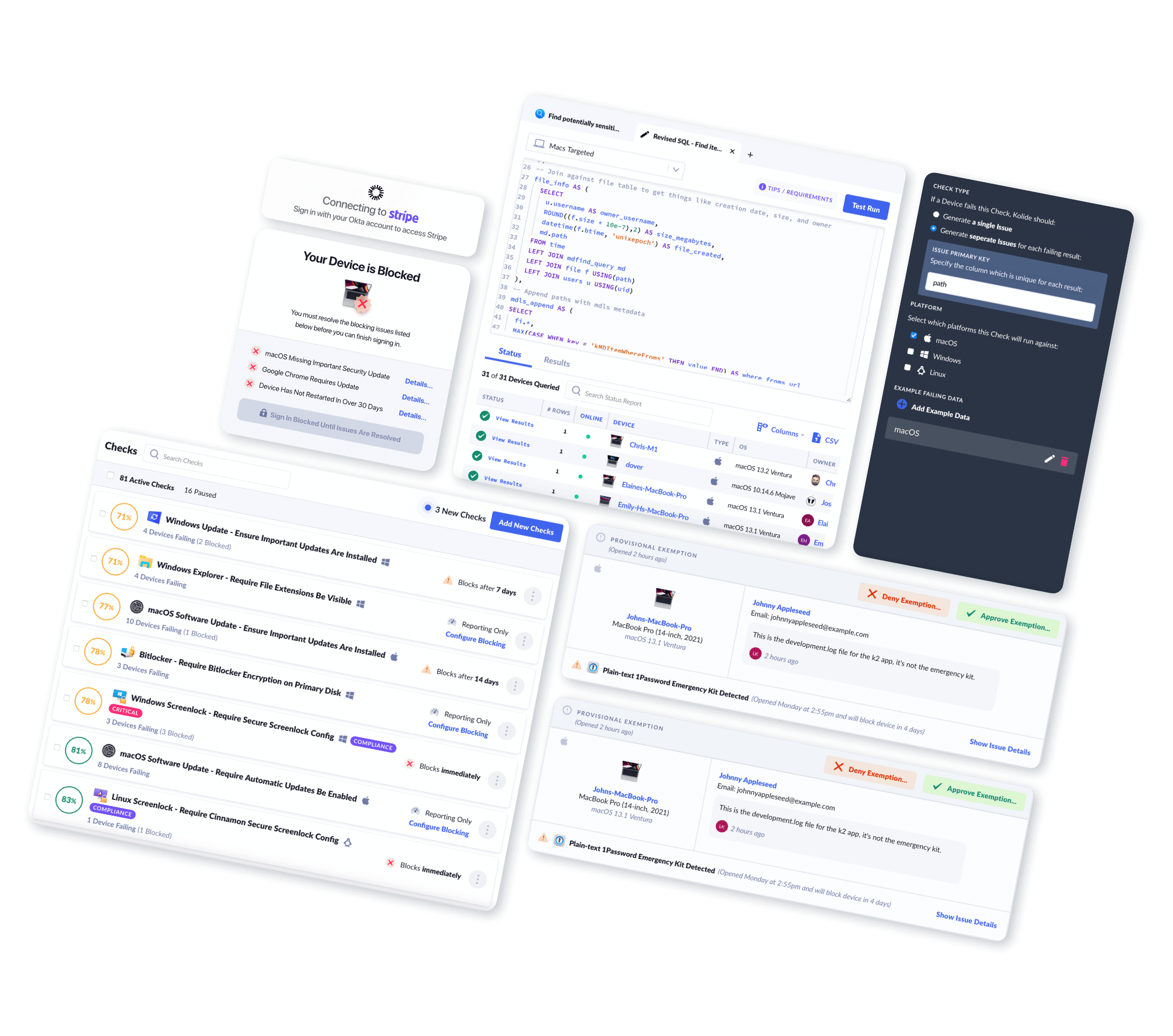

- Getting visibility into worker AI use

- Preventing the most unsafe AI uses

- Establishing an acceptable use policy for AI

This is the process we’ve gone through, and continue to go through, to come up with norms and expectations on AI use at Kolide.

Get visibility into worker AI use

There’s a reason Kolide’s survey data on AI in the workplace is a part of our Shadow IT report; if workers are using AI without the company’s knowledge or supervision, then it should be considered a form of Shadow IT. But, as we’ve written before, you can’t simply issue a blanket ban on any unapproved piece of technology and consider the problem solved. Workers will go around your ban if they think they need a tool to do their job.

Any attempt to get visibility has to come in the form of a non-judgmental conversation, seeking to understand how workers are currently using AI and what they’re getting out of it. Even if this process uncovers some undesirable tools in use, that should be taken as an opportunity to educate users on risks and provide safer alternatives.

Prevent the riskiest forms of AI

Given the risks we’ve just been over, you likely don’t want workers at your company to plug your customers’ sensitive data into an unvetted AI tool they downloaded off the internet. You may also want to block unapproved browser extensions, or forbid the use of coding tools like Copilot altogether.

What you choose to allow vs block depends on your organization’s risk tolerance, but regardless, a block has to be enforceable in order to work. In other words, if you block an AI tool on all company-managed devices, but a worker can access the same data on their personal computer, you haven’t solved the problem; you’ve just put it out of sight. (We talk more about this issue in the Shadow IT report and our blog post on the subject.)

Collaborate on an acceptable use policy for AI tools

In all likelihood, what you’ll really need are two policies: one formal, legalistic document, and one that provides day-to-day guidelines. You might need a lawyer to write the first one, but to create the second, get input from stakeholders across multiple departments.

Now, “get input from stakeholders across multiple departments” is probably the most-given and most-ignored piece of advice in business, but it really is a crucial step if you want a policy that works. In Kolide’s case, we quickly learned that marketing, operations, and engineering all had different needs, so we adapted the policy accordingly.

The result was an acceptable use policy that goes over:

- Restricting sensitive data we expose to AI tools

- Disabling training features

- Being aware of and avoiding biases

- Labeling work significantly augmented by AI

- A list of company-approved AI tools

- An invitation to share other tools with the team

The goal of our policy was to create an environment where employees neither feel pressured to use AI nor punished for doing so. Your organization may have different goals, but what matters is being intentional and explicit about what they are. That’s the best and only way to drive AI out of the shadows.

Download Kolide’s Shadow IT Report

If you found this article interesting, we invite you to download our full report, which includes even more data on AI and other security topics.