GitHub Copilot Isn't Worth the Risk

On November 3rd, programmer and lawyer Matthew Butterick, along with the Joseph Saveri Law Firm, filed a class action lawsuit against GitHub, Microsoft (its parent company), and OpenAI.

The lawsuit, filed “on behalf of a proposed class of possibly millions of GitHub users,” is directed at GitHub Copilot, an AI-powered tool that works as a sophisticated autocomplete for developers. Developers who install Copilot as an IDE extension can enter natural language prompts and Copilot will respond with code suggestions in dozens of programming languages.

GitHub CEO Thomas Dohmke has touted Copilot’s ability to eliminate up to 40% of a developer’s workload by making suggestions for boilerplate code, thus saving developers tedious hours of research and trial-and-error.

But Butterick and other Copilot critics charge that many of Copilot’s suggestions aren’t “boilerplate” at all; they bear the unmistakable fingerprints of their original authors, because Copilot was “trained” on GitHub’s repositories of public and open source code.

This lawsuit, along with the larger debate over Copilot, raises a myriad of issues: technological, legal, ethical, even existential.

For example:

What does it mean for an AI to “learn”?

Are AI tools like Copilot ushering in a new era of innovation, or merely eliminating human labor and creativity?

Is code more analogous to art or to mathematics? And does the answer impact how it should be treated under the law?

These questions are endlessly debatable and we’ll touch on each of them in this article.

But there’s a more urgent question that CTOs need to answer now: Should I allow Copilot at my company? You don’t have much time to mull over your decision, since GitHub just announced that Copilot for Business will debut in December. Your engineers may already be asking you to purchase licenses for them.

As you can see from the title, we tend to think you’re better off saying no, at least while there are so many murky legal issues at play.

Here, we’ll go over the case for and against Copilot, and how you can detect whether it’s already in use at your organization.

The Legal Risks of Copilot

Butterick has written at length about (what he perceives to be) Copilot’s existential threat to the open-source community, but his legal case comes down to the much more straightforward matter of copyright violation. Butterick et al. allege that Copilot’s code suggestions are lifted from open-source software, and that by failing to identify or attribute the original work, Copilot violates open-source licenses.

The implication, obviously, is that organizations that use Copilot are exposed to the same risk.

The 60-second guide to open source licenses

Open source software licenses are not a monolith, and different licenses impose different restrictions on how developers can reuse code. “Permissive” licenses, such as the MIT and Apache Licenses, allow developers to modify and distribute code largely as they see fit. On the other end of the spectrum, “copyleft” licenses such as the GPL require that any reuse be distributed under the original terms of the license.

There are other important differentiators between open source licenses, but for our purposes, what matters most is what they all have in common: they all require developers to provide attribution by including the original copyright notice. This ensures (among other things) that software doesn’t contain incompatible licenses. As Butterick explains, one can’t create software with an MIT license using GPL-licensed code, because “I can’t pass along to others permissions I never had in the first place.”

Copilot strips code of its licenses, so developers who use it run the risk of unwittingly violating copyright. That puts companies at risk of lawsuits, particularly from open-source advocacy groups like the Software Freedom Conservancy (SFC).
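The compatibility rule described above can be sketched in a few lines of Python. This is a deliberately simplified illustration, not legal advice or a complete model: the `OUTBOUND_COMPATIBLE` table and both helper functions are hypothetical names invented for this example, and the table covers only the three license families mentioned in this section (written as SPDX identifiers).

```python
# Simplified, illustrative model of one-way license compatibility.
# Key: license of the code being reused.
# Value: licenses a project containing that code may be released under.
# Hypothetical table for illustration only; not a legal reference.
OUTBOUND_COMPATIBLE = {
    "MIT": {"MIT", "Apache-2.0", "GPL-3.0-only"},
    "Apache-2.0": {"Apache-2.0", "GPL-3.0-only"},
    "GPL-3.0-only": {"GPL-3.0-only"},
}


def can_distribute_under(source_license: str, target_license: str) -> bool:
    """Return True if the table allows source-licensed code in a target-licensed project."""
    # A license missing from the table (for example, code whose license
    # was stripped) is treated as incompatible with everything.
    return target_license in OUTBOUND_COMPATIBLE.get(source_license, set())


def incompatible_sources(source_licenses, target_license):
    """Return the sorted, de-duplicated licenses that block the target license."""
    return sorted(
        {lic for lic in source_licenses if not can_distribute_under(lic, target_license)}
    )


if __name__ == "__main__":
    # GPL code cannot be passed along under MIT, and code of unknown
    # origin cannot be cleared at all.
    print(incompatible_sources(["MIT", "GPL-3.0-only", "UNKNOWN"], "MIT"))
```

The design choice worth noticing is the default: a snippet with no known license is rejected rather than assumed safe, which is the position a company is in when a suggestion arrives without attribution.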

Copilot Competitors

For the record, Copilot is not the only tool that auto-completes code for developers. But its approach to licenses makes it particularly risky. Tabnine, for instance, is trained only on permissively licensed code.

Copilot’s competitors also have a reputation for truly sticking to “boilerplate” suggestions. They don’t seem to share Copilot’s ambitious goals for writing nearly half of a developer’s code, so they’re less likely to suggest complex logic that can be traced back to a copyrighted source. Nevertheless, all AI-powered “pair programmers” introduce a certain amount of risk, and we’re not here to make the case that Copilot is exponentially more dangerous than the others.

Is Copilot breaking copyright law?

There’s no simple answer to this question because there are no perfect analogues to the Copilot case. Still, some arguments are easier to dismiss than others.

Some Copilot defenders maintain that the program’s suggestions are so obvious and generic that they’re not really copyrightable. But GitHub’s own website states that “about 1% of the time, a suggestion may contain some code snippets longer than ~150 characters that matches the training set.”

The class action complaint alleges that even code shorter than 150 characters can constitute a copyright violation, and that “even using GitHub’s own metric and the most conservative possible criteria, Copilot has violated the DMCA at least tens of thousands of times.”

There has not yet been a tidal wave of outrage from developers finding their work on Copilot, but complaints are beginning to surface. In a now-famous Twitter thread, Professor Tim Davis objected to Copilot churning out large chunks of his copyrighted code.

@github copilot, with “public code” blocked, emits large chunks of my copyrighted code, with no attribution, no LGPL license. For example, the simple prompt “sparse matrix transpose, cs” produces my cstranspose in CSparse. My code on left, github on right. Not OK. pic.twitter.com/sqpOThi8nf

— Tim Davis (@DocSparse) October 16, 2022

Butterick et al.’s lawsuit lists other examples, including code that bears significant similarities to sample code from the books Mastering JS and Think JavaScript. The complaint also notes that, in regurgitating commonly used code, Copilot reproduces common mistakes, so its suggestions are often buggy and inefficient. The plaintiffs allege that this proves Copilot is not “writing” in any meaningful way; it’s merely copying the code it has encountered most often.

So far, Microsoft’s defense seems to hang on the idea of “fair use.” Fair use can shield creators from copyright claims when, among other factors, their work is sufficiently transformative. A musician who parodies another artist’s song, for example, can typically claim fair use. But it’s far from clear whether that understanding would apply to Copilot.

Some point to the precedent of Google v. Oracle, in which the Supreme Court ruled that Google was within its rights to use Java APIs to build the Android OS. But APIs are fundamentally built to facilitate communication between programs, which is a much narrower use case than the breadth of code at issue here.

Another case that may shed some light on the legality of Copilot doesn’t concern tech at all. Andy Warhol Foundation v. Goldsmith concerns a series of portraits by the late painter that were based on the work of photographer Lynn Goldsmith. SCOTUS recently heard arguments in that case, but whatever the court ultimately decides, it’s still an imperfect comparison to Copilot, since code is neither precisely art nor precisely science. Some functions and logic really are a matter of straightforward mathematics, while others are idiosyncratic; there’s no one rule that describes every situation.

Michael Weinberg wrote a blog post analyzing the claims for and against Copilot. He concludes that even if the fair use argument falls through, GitHub could fall back on its terms of service to justify taking code from any repo. However, he also notes that this might be a defense of last resort, since it could trigger a backlash from GitHub users.

The Other Drawbacks of Copilot

CTOs might be willing to run the risk of copyleft violations if Copilot were an otherwise irresistible product. (After all, cutting 40% of your developer salaries will pay for a lot of lawyers.) But if you’re still on the fence, there are at least two other factors that might help make up your mind.

Security

Experts have reported that Copilot often suggests code with security flaws. In one study, researchers produced 1,689 programs with Copilot, of which 40% were vulnerable to attack. Granted, this study was conducted while the tool was still in beta, but even now, GitHub is upfront about the fact that it takes no responsibility for the quality of its code.

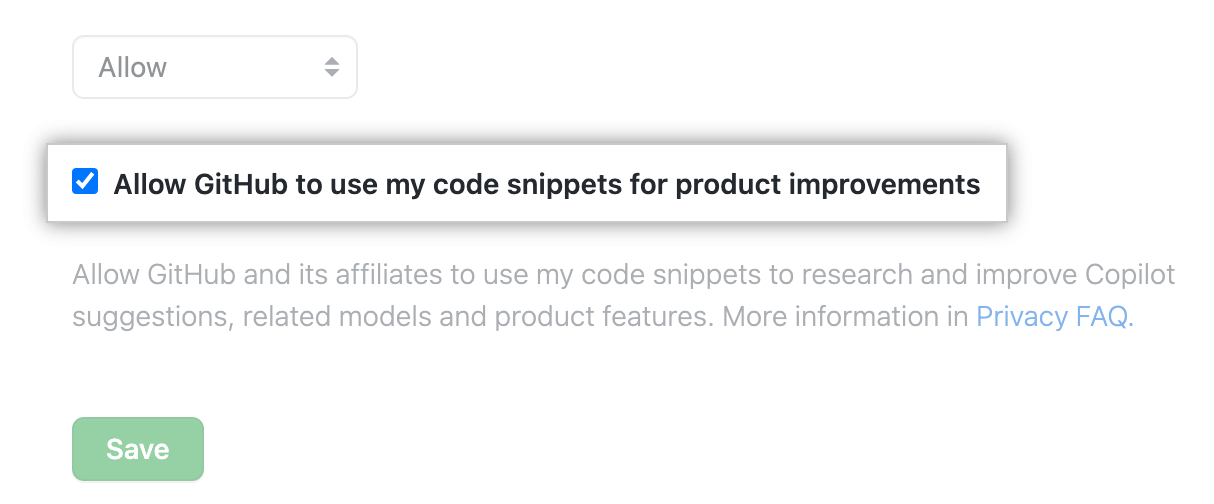

On top of this, one under-discussed security concern is that Copilot is a keylogger. Unlike Tabnine, which developers can choose to run locally, Copilot can only function in the cloud. In fairness, Copilot does allow users to disable telemetry by opting out in GitHub’s “settings” tab.

But there are reasons to doubt whether the opt-out process works as promised. Here’s why: users can also block Copilot from making suggestions that match public code. However, Tim Davis, whose Twitter thread we referenced before, reported that he had taken both those steps, and still had his own code fed back to him.

When I signed up, I disabled the “Allow Github to use my code..” option. Also note that “suggestions matching public code” is “blocked”. Same result … @github emits my LGPL code verbatim, with no license stated and no copyright. pic.twitter.com/viKbqym2eq

— Tim Davis (@DocSparse) October 16, 2022

Usefulness

According to GitHub’s own FAQ page, “we found that users accepted on average 26% of all completions shown by GitHub Copilot.” Butterick’s assessment was more blunt: “Copilot essentially tasks you with correcting a 12-year-old’s homework, over and over.”

Other developers have found the tool more valuable in cutting down their code-related Google searches. Michael Weinberg is pretty close to the mark in describing the current version of Copilot as “a tool that replaces googling for stack overflow answers.” That certainly has value, but not yet the kind of value that will let organizations see meaningful changes to their engineering budget.

One relevant (if difficult to quantify) factor is that providing the necessary oversight of Copilot’s code could slow down the code review process enough to wipe out whatever productivity gains the tool offers. Our CEO cited this as the most significant factor in choosing to disallow Copilot at Kolide.

As a code reviewer there is no way for me to know what code the employee I hired wrote vs the code the AI bot wrote. Knowing who wrote the code absolutely informs the amount of scrutiny I provide. For example, an engineer that has proven day in and day out that they can produce high-quality code is scrutinized less during review than a junior engineer that we just hired.

Without knowing who truly came up with 25% of any given code submitted in a PR, it short-circuits my ability as a reviewer to use high-level heuristics to evaluate code and forces me to look at every line of code assuming the AI may have written it. Since AIs are generally “mostly correct, and then suddenly and inexplicably catastrophically wrong,” I believe code written by AI deserves the most scrutiny of all.

To be fair, GitHub seems to be listening to these concerns. In a November blog post, they announced that in forthcoming releases of Copilot, any code suggestion would come with an inventory of similar code and let developers organize that inventory according to its license (among other variables).

They write:

Using this information, a developer might find inspiration from other codebases, discover documentation, and almost certainly gain confidence that this fragment is appropriate to use in their project. They might take a dependency, provide attribution where appropriate, or possibly even pursue another implementation strategy.

This is a step in the right direction, but even so, giving developers the ability to provide attribution is not the same thing as including it automatically. We’ll have to see what this capability looks like in action (sometime in 2023) before we know how well it addresses its critics’ concerns.

What To Do About Copilot at Your Organization

For a lot of companies, the risk of putting out GPL-tainted code is enough to scare them away from Copilot. In fact, prior to launching his lawsuit, Butterick himself wrote that he “wasn’t worried about its effects on open source” because software developers would inevitably ban it.

As our CEO says, “as someone who used to work on M&A deals, we would often give them a 20-30% haircut on price or even walk away completely if we felt there was undue risk of copyleft pollution.” With that in mind, forbidding the use of Copilot is the safest (if most conservative) option for the time being.

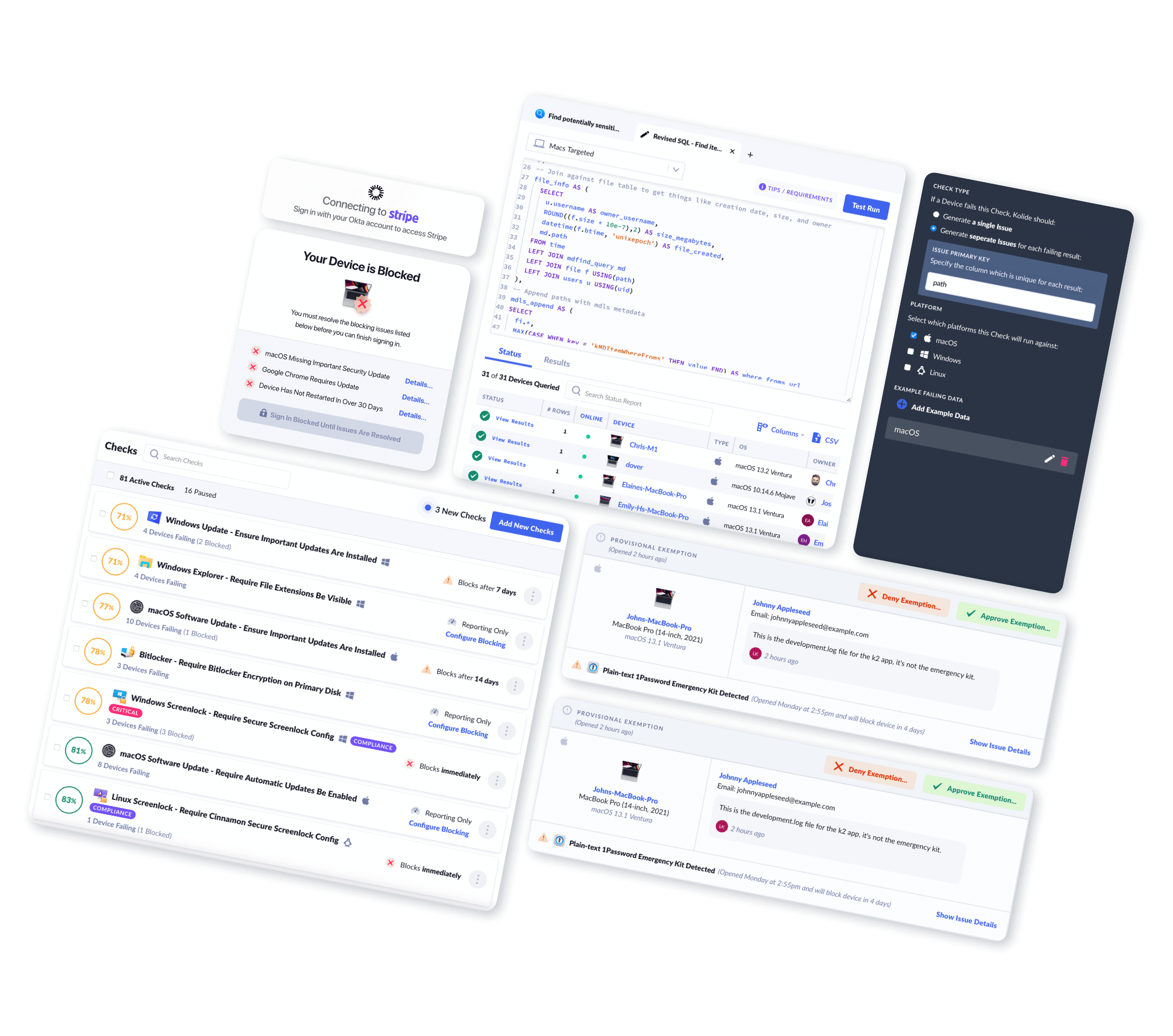

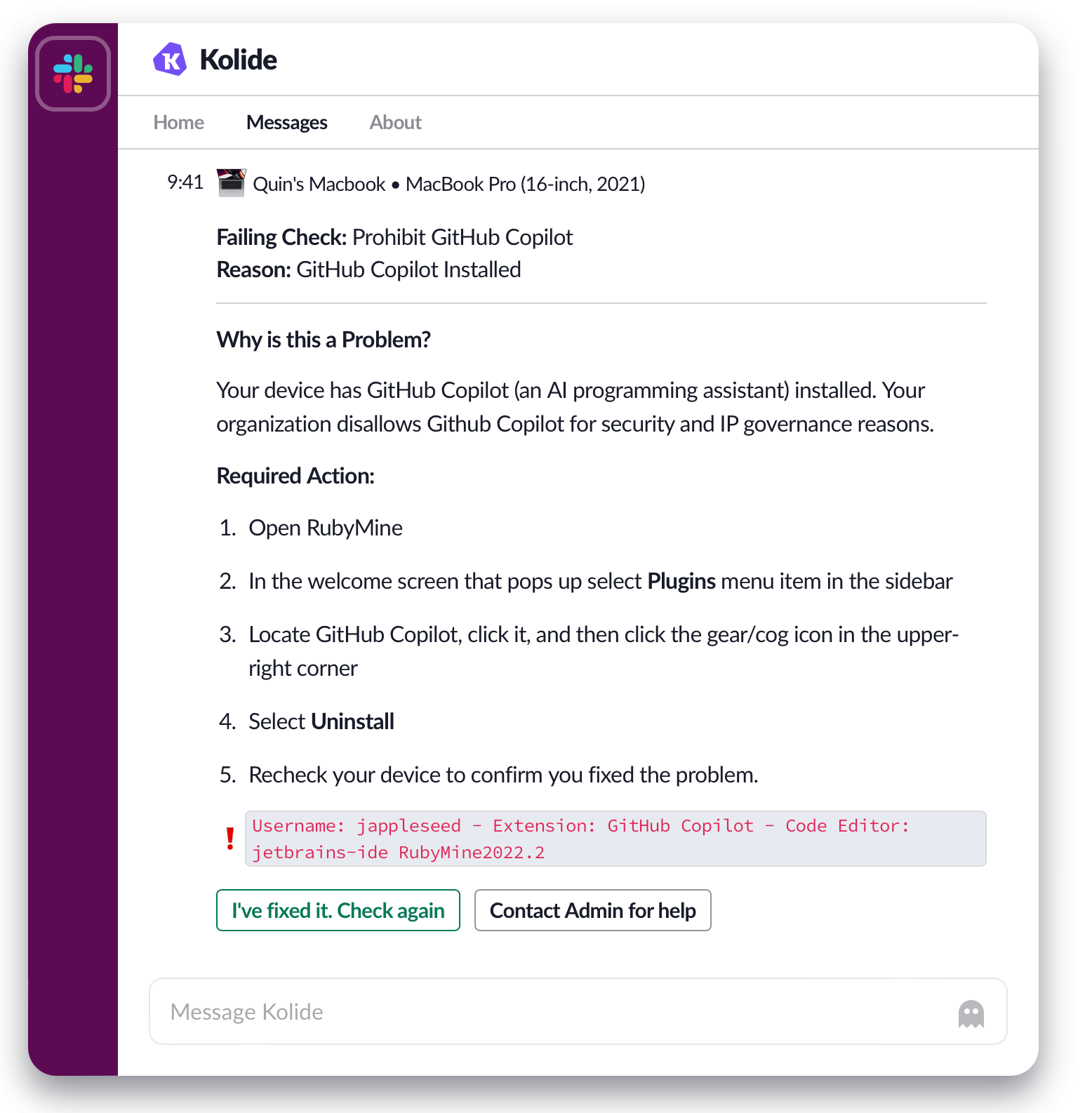

At Kolide, we just released a Check that detects the presence of Copilot on a device, and instructs users to uninstall it. Read our use-case page on that Check to learn more about how it works.
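As a rough illustration of what device-level detection could look like, the sketch below checks an editor's extensions directory for a Copilot folder. To be clear, this is not Kolide's Check, whose implementation isn't described in this article. It assumes VS Code's default per-user extensions directory (`~/.vscode/extensions`) and that the extension's folder name begins with `github.copilot`; verify both for your own environment. `find_copilot_extensions` is a hypothetical helper name.

```python
from __future__ import annotations

from pathlib import Path

# Assumption: VS Code's default per-user extensions directory.
DEFAULT_EXTENSIONS_DIR = Path.home() / ".vscode" / "extensions"
# Assumption: Copilot's folder is named like "github.copilot-<version>".
COPILOT_PREFIX = "github.copilot"


def find_copilot_extensions(extensions_dir: Path = DEFAULT_EXTENSIONS_DIR) -> list[str]:
    """Return the names of extension folders that look like GitHub Copilot."""
    if not extensions_dir.is_dir():
        # Editor not installed, or extensions stored somewhere else.
        return []
    return sorted(
        entry.name
        for entry in extensions_dir.iterdir()
        if entry.is_dir() and entry.name.lower().startswith(COPILOT_PREFIX)
    )


if __name__ == "__main__":
    found = find_copilot_extensions()
    print("Copilot detected:" if found else "No Copilot extension found.", *found)
```

A check like this only covers one editor on one machine. Other IDEs keep their plugins elsewhere, so an empty result here is not proof that Copilot is absent.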

Should you choose to allow Copilot, we advise you to take the following precautions:

- Disable telemetry

- Block public code suggestions

- Thoroughly test all Copilot code

- Run projects through license checking tools that analyze code for plagiarism
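To give a feel for the last precaution, here is a minimal sketch of the simplest thing a license checker does: scanning source text for SPDX license tags and flagging copyleft ones. Real tools go much further, including matching snippets against known open source code. The `COPYLEFT_PREFIXES` list is an illustrative, incomplete assumption, and `copyleft_identifiers` is a hypothetical helper name.

```python
import re

# Matches SPDX short-form tags such as "// SPDX-License-Identifier: MIT".
SPDX_TAG = re.compile(r"SPDX-License-Identifier:\s*([A-Za-z0-9.+-]+)")
# Illustrative and incomplete list of copyleft license-ID prefixes.
COPYLEFT_PREFIXES = ("GPL-", "LGPL-", "AGPL-")


def copyleft_identifiers(source_text: str) -> list[str]:
    """Return the sorted, de-duplicated copyleft SPDX identifiers found in the text."""
    found = SPDX_TAG.findall(source_text)
    return sorted({lic for lic in found if lic.startswith(COPYLEFT_PREFIXES)})


if __name__ == "__main__":
    sample = (
        "// SPDX-License-Identifier: LGPL-2.1-or-later\n"
        "int transpose(void);\n"
        "# SPDX-License-Identifier: MIT\n"
    )
    print(copyleft_identifiers(sample))
```

Note the limitation: a tag scan only catches code that still carries its license header. Suggestions that arrive stripped of their license will pass straight through, which is why snippet-matching tools and careful review are still needed.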

GitHub Copilot Is Charting Unknown Territory

You could write a book (or a book-length Reddit comment) on all the issues Copilot brings up. If nothing else, it’s opened up a fierce debate between AI evangelists and open-source purists.

David Heinemeier Hansson, creator of Ruby on Rails, argues that the backlash against Copilot runs contrary to the whole spirit of open source. Copilot is “exactly the kind of collaborative, innovative breakthrough that I’m thrilled to see any open source code that I put into the world used to enable,” he writes. “Isn’t this partly why we share our code to begin with? To enable others to remix, reuse, and regenerate with?”

While Hansson may be motivated by that sense of expansive generosity, he can’t be said to speak for the open source community as a whole. A developer who wrote a program to find lost pets may have a legitimate objection to their code being used in a missile guidance system.

On the other hand, a legal victory against GitHub, Microsoft, and OpenAI could have unintended side effects that ripple across the whole field of generative AI.

However you feel about it, it does seem that something like Copilot is inevitable. The best-case scenario might just be a version that doesn’t try to divorce code from the humans writing it, in an attempt to become something more like an Autopilot.